Jen bought me an Apple Watch for my birthday a few years ago. I have been meaning to write a short review for a while now, and as today is the fourth anniversary of the original model being launched, I thought it was a good day to publish it! I always prefer reading these long-term reviews to the usual short previews that appear as a product is launched. I’m not a professional technology reviewer, just a geek with a blog, so for a really detailed look check out DC Rainmaker’s review.

My watch is a non-cellular 42mm Apple Watch Series 3 in space grey, which came with a grey sport band. When Jen took me to the Apple Store to refine the Apple Watch hints I’d dropped, I couldn’t get on with the sport band at all, so I told Jen I wasn’t fussed between the black or grey, as I planned to replace it straight away. However, once I had the watch I quickly got used to the strap, and I probably would have preferred the black sport band. I have since bought a black sport loop, which has become my main strap unless I am swimming, out in the rain or dealing with Henry, who is sicking up a lot of milk at the moment.

I had considered the cellular versions of the watch, but I didn’t think it would be worth the extra cost, both the purchase cost and the £5 per month service charge. I also actually prefer the look of the watch without the red dot on the crown, which signifies the cellular versions. The Apple Watch 4 solution of just a red ring looks a lot neater. It is just a shame that the sport loop wasn’t available with the basic watch, only the cellular version. Again, this has been remedied with the new version, so kudos to Apple for sorting these niggles.

When the original Apple Watch was announced, I wasn’t interested in it at all. I had (and still have) a couple of nice automatic watches and a Casio G-Shock, for when a more rugged watch was needed. Even though I considered myself more of a geek than a watch guy, I couldn’t see myself wearing an Apple Watch rather than my other watches, although I did appreciate some of the details and nods to traditional watches on the Apple Watch.

Fast forward a few years, Owen had been born, Jen was looking to get her fitness back and Apple had added GPS to the Series 2 Apple Watch, making it a much better prospect for a fitness watch. In addition to the fitness features I could see that having iPhone notifications on her wrist would be handy whilst wrangling a now wriggling Owen. So I took a flyer and bought Jen an Apple Watch Series 2 for her birthday. Much like when I’d bought her an iPad a few years before, it quickly became an essential device. This was very apparent when Jen forgot her watch charger when we went to Croyde and we had to ask her parents to bring it down when they joined us.

Shortly after Apple announced the Series 3 Apple Watch, now with a barometric altimeter, I noticed some strange height results on my Strava. For example, it showed me gaining more altitude on short local rides than when I’d been slogging uphill on longer rides at trail centres. This, combined with seeing how useful Jen was finding her watch, made me reconsider my view, so I started dropping hints for my birthday.

The fitness features, especially Strava, were my main reason for wanting an Apple Watch and I can safely say that my expectations were blown away! I would have been happy just using it with Strava to record my bike rides, but it is the off-the-bike fitness where it excels. The “three rings” concept really encourages you to hit three different fitness goals each day: stand for at least a minute in an hour for twelve hours of the day, do at least thirty minutes of exercise and burn a predetermined number of calories (400 for me) by moving around. These daily goals are backed up with awards for things like hitting goals on consecutive days, or doubling the move calorie target. These targets are especially addictive. On more than one occasion I have found myself doing press ups before bed to continue a move streak, or getting up and going for a walk when the Watch reminds me that I’ve been sitting down for too long. I have, however, noticed over the last six months or so that it has become a lot easier to hit my 400 calorie target, and my Apple Watch wearing friends have also experienced this. I like to think we are getting fitter, or moving around more, but I expect that someone at Apple has modified the code.

I also use my Watch to track my sleep. It mostly confirms what I already knew: I’m a deep sleeper, but could do with going to bed a wee bit earlier. I also like the “Breathe” feature, although it always seems to prompt me to breathe at the worst moment. I don’t know what logic, if any, is behind these alerts.

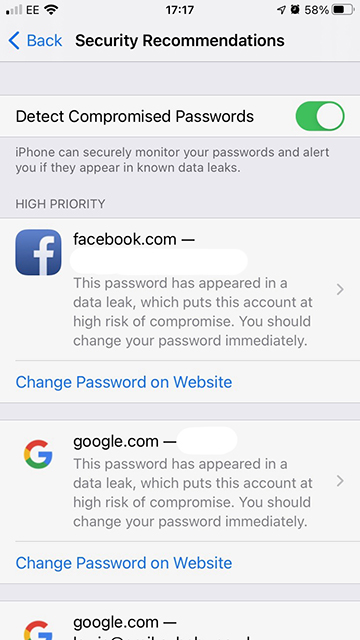

The Watch includes a heart rate sensor, which has opened up a whole new load of data for me, especially during bike rides. On the other hand, too much data can be a bad thing! On a few occasions I have woken up to an alert on my Watch telling me it detected an abnormally high heart rate whilst I was asleep. This has led to various medical checks, none of which have found anything. So either there is a problem with the heart rate sensor on my Watch, or I have a rare/very occasional heart problem. I ordered a Wahoo Tickr heart rate monitor, which is on a chest strap, to help me rule out any problems with the Watch, but of course the issue has not reoccurred. I now use the Tickr paired to my Watch to monitor heart rate on longer bike rides, as chest straps are meant to be more accurate than optical sensors like the one used on the Watch.

Aside from fitness tracking I also use my Watch to preview notifications from my iPhone. I find it much easier to glance at my wrist to see a snippet of information, rather than taking my iPhone out of my pocket. Notifications from Apple apps, such as iMessages or email, work great: you can usually see what the content of the message is and give a brief response. However, third party apps are a bit more hit and miss. For basic Siri tasks, such as setting a timer, it is much easier to use the Watch. I also find it useful on the bike, where I would usually need to remove my gloves to use my iPhone. I can send messages or even make and receive phone calls using Siri, whilst riding along! And Apple Pay – I doubt I will ever tire of being able to pay for things with my Watch.

The way the Watch and the iPhone hand off notifications to each other works seamlessly, which is actually frustrating for me as an owner of multiple Apple devices – if my Watch and iPhone can work that closely together why do I still get so many duplicated alerts on my Macs? Hopefully this is something Apple will work on in the future.

The only other problem I have with the Apple Watch is that I hardly ever wear my other watches these days. The Apple Watch integrates with my life so well that my mechanical watches rarely get worn. Sometimes I wonder if the stand goal is really to make sure that you are wearing your Apple Watch for at least twelve hours a day, rather than any other watch… I occasionally force myself to wear my mechanical watches, usually on special occasions, and still love the amazing detail in the mechanisms, but I have been caught out trying to pay for shopping with them. The watch that has suffered the most is my G-Shock 5600. It used to be my daily watch, the only watch I would take when travelling etc., but it is neither as useful as the Apple Watch, nor as special as my mechanical watches. As I was writing this blog I took it out of my watch box and realised the battery was showing “low”. In the years I wore it, it was always on “high”. Fortunately, a few days on the windowsill recharged the battery for another few years.

On the subject of charging, when I first got the Apple Watch I charged it overnight, every night. If I forgot, I could get two days’ use from one charge. These days I charge the Watch while I am getting changed, or having a shower. As it is only a small battery, it does not take long to charge at all.

To conclude, out of all the gadgets I have owned, the Apple Watch fitted into my life and made itself an essential item for me quicker than anything else. If I broke/lost it I would replace it without a doubt. It also makes me wonder what will happen to the luxury watch industry. I am usually a big fan of heritage and simplicity, but am now rarely found without my Apple Watch on my wrist.